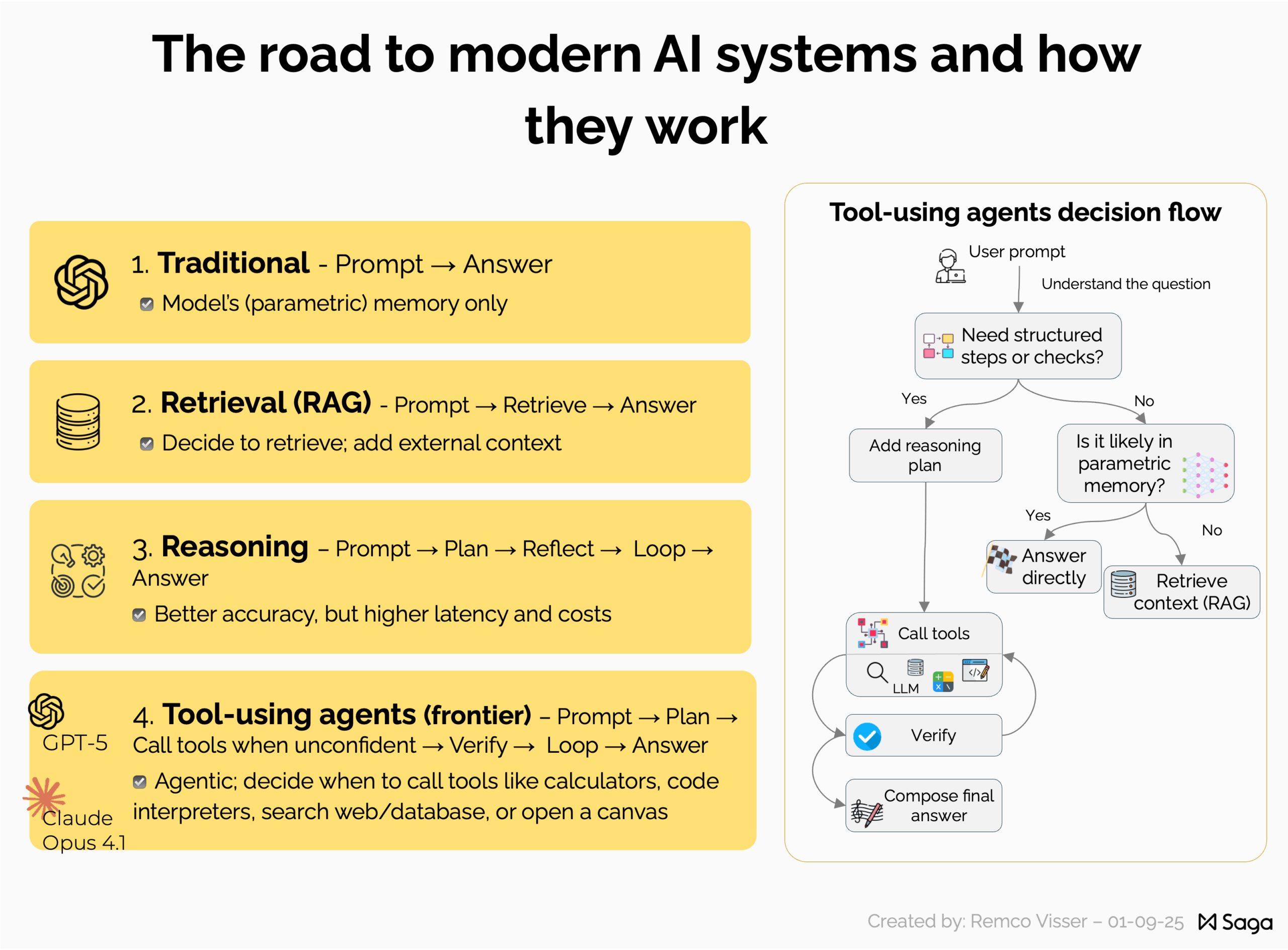

The road to modern AI systems and how they work

In addition to growing in the number of parameters and the amount of data they process, AI models are advancing in other important ways. Some modern AI systems no longer respond immediately to your question; they first decide whether to retrieve data, reason through a problem, conduct research, or use specialized tools – and in what order – before producing a final answer. Let’s dive into how AI models have changed over this relatively short period of time that feels like a lifetime.

1) The first Large Language Models

Traditional: Prompt → Answer

Early large language models (and plenty still in production) work like this:

- You give the model a prompt, and it predicts the most likely next token (a word or a piece of a word). That token is appended to the prompt, and the process repeats, predicting and appending one token at a time until the model determines the response is complete.

- The model’s knowledge/memory is parametric: it is stored in billions of parameters learned during training, not in a database.
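The predict-and-append loop can be sketched in a few lines. This is a toy illustration, not how a real model is built: the probability table `NEXT_TOKEN_PROBS`, the `<end>` marker, and the `generate` function are all made up for this example, and the toy only looks at the last token, whereas a real LLM conditions on the whole prompt.

```python
# Toy stand-in for a language model: a hand-written table of next-token
# probabilities. A real LLM computes these from billions of parameters.
# (All names and numbers here are hypothetical.)
NEXT_TOKEN_PROBS = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "dog": {"ran": 0.8, "sat": 0.2},
    "sat": {"<end>": 1.0},
    "ran": {"<end>": 1.0},
}


def generate(prompt, max_new_tokens=10):
    """Repeatedly pick the most likely next token and append it."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        # Unknown tokens fall back to "stop" in this toy table.
        probs = NEXT_TOKEN_PROBS.get(tokens[-1], {"<end>": 1.0})
        next_token = max(probs, key=probs.get)  # greedy choice
        if next_token == "<end>":  # the model decides it is done
            break
        tokens.append(next_token)
    return tokens


print(generate(["the"]))  # ['the', 'cat', 'sat']
```

Real systems often sample from the probabilities instead of always taking the top token, which is why the same prompt can produce different answers.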

2) The retrieval upgrade (RAG)

Retrieval Upgrade: Prompt → Retrieve context → Answer

Retrieval-Augmented Generation (RAG) adds a memory outside the model:

- Retrieve documents from a (vector) database, codebase, or a private corpus (this can be the public internet, but often it’s your documents)

- Add the retrieved chunks to the prompt and generate an answer that cites or adheres to the retrieved context.

- In modern RAG, AI models can detect the need for retrieval. A small policy decides: “Do I trust my parametric memory, or should I look things up?”
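The retrieve-then-answer flow could look roughly like the sketch below. It is a simplified illustration under several assumptions: the three documents in `DOCS` are invented, `embed` uses plain word counts instead of a learned embedding model, and the list in memory stands in for a vector database. The final call to the language model is left out; the sketch stops at the prompt that would be sent.

```python
import math
import re
from collections import Counter

# Hypothetical private corpus; in practice this lives in a (vector) database.
DOCS = [
    "Invoices are due 30 days after the invoice date.",
    "The office is closed on public holidays.",
    "Refunds are processed within 5 business days.",
]


def embed(text):
    """Toy embedding: word counts. Real systems use learned vectors."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, docs, k=1):
    """Return the k documents most similar to the question."""
    q = embed(question)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(question, docs, k=1):
    """Put the retrieved chunks into the prompt, numbered for citation."""
    chunks = retrieve(question, docs, k)
    context = "\n".join(f"[{i + 1}] {c}" for i, c in enumerate(chunks))
    return (
        "Answer using only the context below and cite sources by number.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


print(build_prompt("When are refunds processed?", DOCS))
```

If `retrieve` returns the wrong chunk, the model is instructed to answer from the wrong text, which is the "garbage in, garbage out" risk in practice.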

Benefits:

- More recent, source-grounded outputs

- Reduced hallucinations, especially on niche content

Pitfalls:

- Garbage in, garbage out. Poor retrieval hurts answers.

3) Reasoning models

Reasoning: Prompt → Plan → Reflect → Loop → Answer

Models can now be guided to plan before answering. This is also called reasoning, thinking, or test-time (inference-time) compute.

Key ideas:

- The system allocates extra steps to decompose the task, check sub-results, or try multiple paths and then vote/verify.

- Reflection: Let the model or a companion model critique and revise an initial draft.
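The "try multiple paths and then vote" idea can be illustrated with a small sketch. The list `samples` is hypothetical: it stands in for the final answers of five independent reasoning runs on the same question, and `majority_vote` is a name chosen for this example.

```python
from collections import Counter


def majority_vote(candidate_answers):
    """Return the most common answer and the share of paths that agree."""
    counts = Counter(candidate_answers)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(candidate_answers)


# Hypothetical final answers from five independent reasoning paths.
samples = ["42", "42", "41", "42", "40"]
answer, agreement = majority_vote(samples)
print(answer, agreement)  # 42 0.6
```

The agreement share is a rough confidence signal: a low value could be a reason to spend more steps or to hand the draft to a critique pass.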

Cost vs. quality:

- More steps usually mean better accuracy, but also higher latency and compute costs. Reserve them for more complex questions.

Tip:

- Spelling out your own step-by-step plan (chain-of-thought) in the prompt can make a reasoning model less prone to hallucinations. It is also an excellent way to add your domain expertise.

4) The current frontier: Tool-using agents

Current frontier models (like GPT-5): Prompt → Plan → Call tools when the model is not confident → Iterate until confident → Answer

Today’s best systems are agentic: they can decide to call external tools with structured inputs/outputs. Common tools:

- Calculators & solvers for exact math or finance (LLMs are not great at calculations)

- Code interpreters/sandboxes to run code, test hypotheses, transform data, or plot.

- Databases & vector stores for context retrieval (RAG) over larger datasets

- Search/browse for live info

- Drafting canvases for creating drafts
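To make "structured inputs/outputs" concrete, here is a minimal sketch of a tool call as JSON. The request shape (`tool`, `arguments`), the response shape (`ok`, `result`, `error`), and the two example tools are assumptions for illustration; actual providers define their own formats.

```python
import json


# Two hypothetical tools the model is allowed to call.
def add(a, b):
    return a + b


def word_count(text):
    return len(text.split())


TOOLS = {"add": add, "word_count": word_count}


def call_tool(request_json):
    """Run one structured tool request and return a structured result."""
    request = json.loads(request_json)
    name = request.get("tool")
    if name not in TOOLS:
        return json.dumps({"ok": False, "error": f"unknown tool: {name}"})
    try:
        result = TOOLS[name](**request.get("arguments", {}))
    except TypeError as exc:  # missing or unexpected arguments
        return json.dumps({"ok": False, "error": str(exc)})
    return json.dumps({"ok": True, "result": result})


# The model emits the request; the system runs it and feeds the result back.
print(call_tool('{"tool": "add", "arguments": {"a": 1250, "b": 375}}'))
# {"ok": true, "result": 1625}
```

Because the model only sees tool names and argument shapes, a tool can be replaced by a better implementation without touching the model.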

How it works under the hood:

- A Planner chooses a sequence of steps (sometimes it learns this implicitly). Your prompt is essential here: spell out as many steps as you can to guide the model in the right direction. You should be orchestrating the AI, not the other way around.

- An Executor calls tools

- A Verifier checks outputs (range checks, unit tests, schema validation, or an ensemble)

- The system loops through these steps until the result is confident enough

- Compose the final answer, often with citations, data tables, or visuals generated by tools.
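The plan, execute, verify, and loop steps above can be sketched as follows. Everything here is hypothetical: the plan is a fixed list instead of a model-generated one, `make_flaky_rate_tool` simulates a tool that returns a bad value on its first call, and the verifiers are simple range checks. Production agent frameworks are considerably more involved.

```python
def make_flaky_rate_tool():
    """Hypothetical tool: first call returns a percent (5.0), later calls
    return the expected fraction (0.05), so the verifier has work to do."""
    calls = {"n": 0}

    def lookup_rate():
        calls["n"] += 1
        return 5.0 if calls["n"] == 1 else 0.05

    return lookup_rate


def run_agent(plan, max_attempts=3):
    """Execute each planned step, verify its output, retry on failure."""
    results, trace = {}, []
    for step in plan:
        for attempt in range(1, max_attempts + 1):
            output = step["execute"](results)          # Executor
            ok = step["verify"](output)                # Verifier
            trace.append((step["name"], attempt, output, ok))
            if ok:
                results[step["name"]] = output
                break
        else:
            raise RuntimeError(f"step {step['name']!r} never passed verification")
    return results, trace


lookup_rate = make_flaky_rate_tool()

# The Planner's output: an ordered list of steps (fixed here for illustration).
plan = [
    {"name": "rate",
     "execute": lambda r: lookup_rate(),
     "verify": lambda x: 0 <= x <= 1},                 # range check
    {"name": "interest",
     "execute": lambda r: round(1000 * r["rate"], 2),
     "verify": lambda x: x >= 0},
]

results, trace = run_agent(plan)
print(results)
for entry in trace:
    print(entry)  # the trace is what makes the run auditable
```

The trace records every attempt, including the rejected first value, which is the kind of record you would inspect when auditing an agent’s tool calls.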

Why this matters:

- Fewer hallucinations, because facts and calculations come from tools instead of the model’s memory

- Auditable: you can inspect the line of thinking and tool calls (until AI models decide to use their own language instead of natural language…)

- Modular: you can swap tools without retraining the model

Conclusion

As models evolve, we’ll likely see even tighter integration between reasoning, retrieval, and external tools, moving toward systems that feel less like static models and more like dynamic collaborators.